Fabien Tarissan, Lionel Tabourier

In Proceedings of the 8th International Conference on Complex Networks and their Applications, Lisbon, Portugal, 2019

We are interested in all aspects of real world networks and their models, from internet measurements to random graphs, from social network analysis to spreading phenomena, and from graph algorithms to biological networks.

Vincent Gauthier

Tuesday, November 19, 2019, 2:00 pm, room 26-00/332, Jussieu

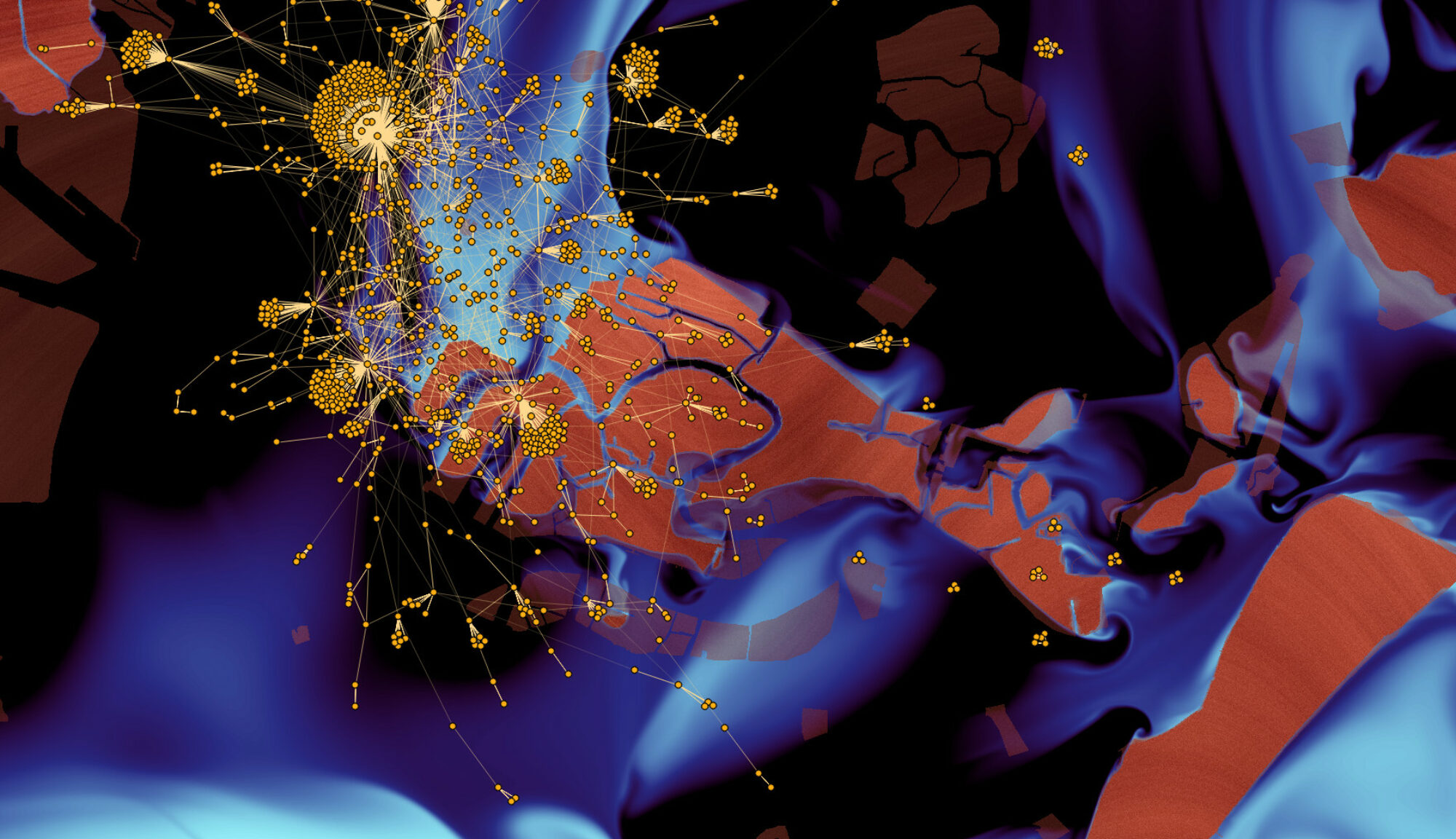

In recent years, the study of population dynamics from mobile traffic data has proven to offer rich insights into human mobility laws, disaster recovery, infectious disease epidemics, commuting patterns, urban planning, and the measurement of air pollution and energy consumption in cities. These studies have demonstrated how data collected by mobile network operators can effectively complement, or even replace, traditional sources of demographic data, such as censuses and surveys. We present here a series of works in which we developed methods to extract mobility information from mobile phone data, aimed at public transport authorities. In the first study, we developed an unsupervised algorithm that maps mobile phone traces onto a multimodal transport network. One of the main strengths of this work is its ability to map noisy, sparse, multimodal cellular trajectories onto a multilayer transportation network whose layers have different physical properties, rather than only mapping trajectories associated with a single layer. In a second study, we proposed a new approach to infer population density at urban scales, based on aggregated mobile network traffic metadata. Our approach allows estimating both static and dynamic populations, achieves a significant improvement in accuracy over state-of-the-art solutions in the literature, and was validated on different city scenarios.

Pedro Ramaciotti Morales, Lionel Tabourier, Sylvain Ung, and Christophe Prieur.

In Proceedings of the 30th ACM Conference on Hypertext and Social Media, pp. 133-142. ACM, 2019.

The quantitative measurement of the diversity of information consumption has emerged as a prominent tool in the examination of relevant phenomena such as filter bubbles. This paper proposes an analysis of the diversity of the navigation of users inside a website through the analysis of server log files. The methodology, guided and illustrated by a case study but easily applicable to other cases, establishes relations between types of users’ behavior, site structure, and diversity of web browsing. Using the navigation paths of sessions reconstructed from the log file, the proposed methodology offers three main insights: 1) it reveals diversification patterns associated with the page network structure, 2) it relates human browsing characteristics (such as multi-tabbing or click frequency) to the degree of diversity, and 3) it helps identify diversification patterns specific to subsets of users. These results are in turn useful in the analysis of recommender systems and in the design of websites when there are diversity-related goals or constraints.

Pierluigi Crescenzi, Clémence Magnien and Andrea Marino

Algorithms 2019, 12(10), 211 (Special Issue Algorithm Engineering: Towards Practically Efficient Solutions to Combinatorial Problems)

Temporal networks are graphs in which edges have temporal labels, specifying their starting times and their traversal times. Several notions of distances between two nodes in a temporal network can be analyzed, by referring, for example, to the earliest arrival time or to the latest starting time of a temporal path connecting the two nodes. In this paper we mostly refer to the notion of temporal reachability by using the earliest arrival time. In particular, we first show how the sketch approach, which has been already used in the case of classical graphs, can be applied to the case of temporal networks in order to approximately compute the sizes of the temporal cones of a temporal network. By making use of this approach, we subsequently show how we can approximate the temporal neighborhood function (that is, the number of pairs of nodes reachable from one another in a given time interval) of large temporal networks in a few seconds. Finally, we apply our algorithm in order to analyze and compare the behavior of 25 public transportation temporal networks. Our results can be easily adapted to the case in which we want to refer to the notion of distance based on the latest starting time.
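
To make the notion of earliest arrival time concrete, here is a minimal sketch of an exact single-source computation. It is not the sketch-based approximation described in the abstract: it only illustrates the underlying reachability notion. The function name `earliest_arrival`, the `(u, v, departure, duration)` edge layout and the toy data are assumptions made for illustration, and traversal times are assumed to be strictly positive.

```python
from math import inf


def earliest_arrival(edges, source, t_start=0.0, t_end=inf):
    """Earliest arrival time at every node reachable from `source`.

    `edges` holds (u, v, departure, duration) tuples with duration > 0
    (an assumed layout). Paths may not leave before `t_start` and must
    arrive no later than `t_end`.
    """
    arrival = {source: t_start}
    # One pass over edges sorted by departure time is enough when all
    # durations are positive: every edge usable before `dep` was seen earlier.
    for u, v, dep, dur in sorted(edges, key=lambda e: e[2]):
        if dur <= 0:
            raise ValueError("traversal times must be strictly positive")
        reachable_in_time = arrival.get(u, inf) <= dep
        if reachable_in_time and dep + dur <= t_end:
            if dep + dur < arrival.get(v, inf):
                arrival[v] = dep + dur
    return arrival


# Hypothetical toy network: four stops and five timed connections.
edges = [
    ("a", "b", 1, 2),
    ("b", "c", 2, 1),  # leaves b before we can arrive there
    ("b", "c", 4, 1),
    ("a", "c", 6, 3),
    ("c", "d", 5, 1),
]
arrival = earliest_arrival(edges, "a")
print(arrival)
print(len(earliest_arrival(edges, "a", t_end=5)), "nodes reachable by time 5")
```

The number of keys in the returned dictionary is the exact size of the set of nodes reachable from the source in the given interval, which is the quantity a sketch-based method would estimate for all sources at once.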

Yiannis Siglidis

October 10, 2019, 2:00 pm. Room 26-00/332, Jussieu.

The study of dynamical graphs, i.e. data models capable of representing temporal structures, is becoming more and more important as the amount of data produced and the storage capabilities of existing computational infrastructure increasingly associate information with time. In their 2017 paper "Stream Graphs and Link Streams for the Modeling of Interactions over Time", Matthieu Latapy, Tiphaine Viard and Clémence Magnien defined a theoretical framework for modelling temporal networks that attempts a consistent temporal generalisation of graph theory. With funding from the ODYCCEUS (https://www.odycceus.eu/) interdisciplinary European program, a first attempt at implementing a library for stream graphs was made by Yiannis Siglidis (developer) and Robin Lamarche-Perrin (supervisor). Their implementation stands as a prototype of a generic basis that would support all existing implementations of stream-graph algorithms, while allowing enrichment and re-design of elementary data structures. Its contribution should not be considered only a technical one, in the sense of producing a new baseline tool, but also a theoretical one, as we examined and uncovered some of the immaturities of the current formalism from the point of view of implementation. We hope that this tool will show a small part of the computational possibilities offered by the stream-graph formalism and thereby raise the importance of theoretical research in that direction, as well as theoretical research on data structures supporting this novel approach to dynamical networks. The current implementation supports continuous, discrete, instantaneous, weighted and unweighted instances of directed stream graphs. The library can be found at https://github.com/ysig/stream_graph and is licensed under GNU-GPL3.

Christian Lyngby Vestergaard

Wednesday, December 18, 2019, 10:30 am, room 24-25-405, Jussieu

Many dynamical systems can be successfully analyzed by representing them as networks. Empirically measured networks and dynamic processes that take place in these situations show heterogeneous, non-Markovian, and intrinsically correlated topologies and dynamics. This makes their analysis particularly challenging. Randomized reference models (RRMs) have emerged as a general and versatile toolbox for studying such systems. Defined as ensembles of random networks with given features constrained to match those of an input (empirical) network, they may for example be used to identify important features of empirical networks and their effects on dynamical processes unfolding in the network. RRMs are typically implemented as procedures that reshuffle an empirical network, making them very generally applicable. However, the effects of most shuffling procedures on network features remain poorly understood, rendering their use non-trivial and susceptible to misinterpretation. Here we propose a unified framework for classifying and understanding microcanonical RRMs (MRRMs). Focusing on temporal networks, we use this framework to build a taxonomy of MRRMs that proposes a canonical naming convention, classifies them, and deduces their effects on a range of important network features. We furthermore show that certain classes of compatible MRRMs may be applied in sequential composition to generate over a hundred new MRRMs from the existing ones surveyed in this article. We provide two tutorials showing applications of the MRRM framework to empirical temporal networks: 1) to analyze how different features of a network affect other features and 2) to analyze how such features affect a dynamic process in the network. We finally survey applications of MRRMs found in the literature. Our taxonomy provides a reference for the use of MRRMs, and the theoretical foundations laid here may further serve as a base for the development of a principled and automated way to generate and apply randomized reference models for the study of networked systems.
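
As an illustration of what a shuffling procedure looks like, here is a minimal sketch of one simple reshuffling of a temporal network: timestamps are permuted uniformly at random among events. The function name `shuffle_timestamps`, the `(i, j, t)` event layout and the toy data are assumptions made for illustration; the name is not the canonical one proposed in the taxonomy.

```python
import random


def shuffle_timestamps(events, seed=None):
    """Return a copy of `events` with timestamps permuted among events.

    `events` is a list of (i, j, t) contacts (an assumed layout). Each
    contact keeps its pair of nodes and receives a timestamp drawn without
    replacement from the original timestamps.
    """
    rng = random.Random(seed)
    times = [t for _, _, t in events]
    rng.shuffle(times)
    return [(i, j, t) for (i, j, _), t in zip(events, times)]


# Hypothetical toy contact sequence.
events = [("a", "b", 1), ("a", "b", 5), ("b", "c", 2), ("c", "d", 9)]
shuffled = shuffle_timestamps(events, seed=0)
print(shuffled)
```

By construction this procedure preserves the static graph, the number of events on each link and the multiset of timestamps, while destroying correlations between when an event occurs and the link on which it occurs. Comparing a measurement on the original data with the same measurement on many shuffled copies indicates how much that measurement depends on those correlations.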

Bilel Benbouzid

October 8, 2019, 11:00 am. Room 25-26/105, Jussieu.

This presentation reports the results of a research project on the political and media space of the YouTube platform. Two main questions motivate our study, which is still in an exploratory phase. First, YouTube is not a social network as such: it is at once a network of users (each user is a channel), a storage space accessed through a search engine, and a streaming platform that depends largely on a recommendation system. How, then, can we account for its structure and categorize the channels that compose it? Second, we seek to measure the degree of polarization of the media and political space on YouTube: does a relational analysis of channels reveal a structure equivalent to that of the media ecosystem uncovered by the Médialab of Sciences Po from an analysis of the hyperlink networks of news sites and their circulation dynamics on Twitter (Cardon, 2019)? Or, on the contrary, do the relations between channels on YouTube reveal a structure specific to the architecture of the platform? To answer this question, YouTube must be analyzed from the point of view of the different ways in which channels are made visible, whether human or algorithmic. This is why we propose to present the structure of channels on YouTube along three dimensions, which are different but complementary ways of accounting for polarization: the social network, which corresponds to the network of channels subscribed to or recommended by the channels themselves; the network of channels that share fan communities; and the network of channels formed by the videos jointly recommended by the algorithm. These three dimensions reveal the multiple facets of YouTube.
While the first shows how humans recommend channels to one another and the second shows the audiences shared by channels, the third accounts for the media and political space shaped by a machine (algorithmic recommendation). In this exploratory phase, we compared these three dimensions according to the diversity of the content they promote (channels were categorized manually). In this presentation we will show 1) the channel networks that appear along the three dimensions, 2) the modularity matrices as a function of channel categories and 3) a comparison of the distribution of channel categories along the three dimensions, based on the results of random walk experiments and the associated perplexity computations. We show that the channel networks produced by humans (the networks of friend channels and of fan audiences) polarize less than the network produced by algorithmic recommendation. We also show that if there is a filter bubble, it is not where it is expected: the content most recommended by the platform is not so much sensational and "conspiracist" content (as is often denounced in public debate) as traditional media channels, which is probably one effect of the action taken by YouTube to change its recommendation system. These results invite a discussion of the nature of the public space that YouTube builds in this context of disinformation. YouTube seems to give particular support to content from news professionals, far more than to content from alternative channels. In this discussion, we propose to debate the nature of "YouTube democracy" through interpretations of network analysis metrics and models.

Olivier Alexandre

Chapter in Reconceptualising Film Policies, 2017

The nature of the French audiovisual sector is determined by a layering of policies, created at various periods of time. A public policy system has been continuously developed and adapted since the 1950s, mostly focusing on the support and defence of the artistic and moral quality of film and television programmes. This institutional system has relied on ‘qualified personalities’ emanating from diverse sectors such as cinema, television, arts, culture, education, administration and the political world. The chapter presents a sociological analysis of the French model matrix. It focuses on the revolving-door system and the policy-making personnel that have enforced a stable regulatory frame for audiovisual industries. The rise of digital operators and executives – more internationalised and engineering-solution oriented – is currently destabilising this ecosystem. There is an important generational, cultural, ideological and linguistic gap between the French ‘Media government’ and the management teams of the new players.

Armel Jacques Nzekon Nzeko’o, Maurice Tchuente and Matthieu Latapy

Journal of Interdisciplinary Methodologies and Issues in Sciences, 2019

Recommending appropriate items to users is crucial in many e-commerce platforms that contain implicit data such as users’ browsing, purchasing and streaming history. One common approach consists in selecting the N most relevant items for each user, for a given N, which is called top-N recommendation. To do so, recommender systems rely on various kinds of information, like item and user features, past interest of users for items, browsing history and trust between users. However, they often use only one or two such pieces of information, which limits their performance. In this paper, we design and implement GraFC2T2, a general graph-based framework to easily combine and compare various kinds of side information for top-N recommendation. It encodes content-based features, temporal and trust information into a complex graph, and uses personalized PageRank on this graph to perform recommendation. We conduct experiments on Epinions and Ciao datasets, and compare obtained performances using F1-score, Hit ratio and MAP evaluation metrics, to systems based on matrix factorization and deep learning. This shows that our framework is convenient for such explorations, and that combining different kinds of information indeed improves recommendation in general.
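
The core mechanism, personalized PageRank used for top-N recommendation, can be sketched as follows. This is a minimal illustration on a plain user-item bipartite graph and is not GraFC2T2 itself: it includes none of the content, temporal or trust information of the framework. The function names, the damping value `alpha=0.85`, the number of iterations and the toy purchase data are all assumptions.

```python
from collections import defaultdict


def personalized_pagerank(adj, source, alpha=0.85, iters=50):
    """Power iteration for PageRank with all restarts going to `source`."""
    nodes = list(adj)
    rank = {n: 0.0 for n in nodes}
    rank[source] = 1.0
    for _ in range(iters):
        nxt = {n: 0.0 for n in nodes}
        for n in nodes:
            if adj[n]:
                share = alpha * rank[n] / len(adj[n])
                for m in adj[n]:
                    nxt[m] += share
            else:
                # A node without neighbours sends its mass back to the source.
                nxt[source] += alpha * rank[n]
        nxt[source] += 1.0 - alpha  # restart mass
        rank = nxt
    return rank


def top_n(purchases, user, n=2):
    """Rank the items `user` has not interacted with by personalized PageRank."""
    adj = defaultdict(set)
    for u, item in purchases:
        adj[("user", u)].add(("item", item))
        adj[("item", item)].add(("user", u))
    start = ("user", user)
    if start not in adj:
        return []
    scores = personalized_pagerank(adj, start)
    seen = adj[start]
    candidates = [
        (score, node[1])
        for node, score in scores.items()
        if node[0] == "item" and node not in seen
    ]
    # Highest score first; ties broken alphabetically for reproducibility.
    return [item for _, item in sorted(candidates, key=lambda p: (-p[0], p[1]))[:n]]


# Hypothetical toy interaction data.
purchases = [
    ("alice", "book"), ("alice", "pen"),
    ("bob", "book"), ("bob", "lamp"),
    ("carol", "pen"), ("carol", "lamp"), ("carol", "mug"),
]
print(top_n(purchases, "alice"))
```

Side information would enter such a scheme by adding nodes and edges to the graph before running the same ranking step, which is the general idea the abstract describes.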

Thibaud Arnoux, Lionel Tabourier and Matthieu Latapy

Dynamics On and Of Complex Networks III, pp 135-150, 2019

Aurore Payen, Lionel Tabourier and Matthieu Latapy

PLOS ONE, 2019

Infections can spread among livestock notably because infected animals can be brought to uncontaminated holdings, therefore exposing a new group of susceptible animals to the disease. As a consequence, the structure and dynamics of animal trade networks is a major focus of interest to control zoonosis. We investigate the impact of the chronology of animal trades on the dynamics of the process. Precisely, in the context of a basic SI model spreading, we measure on the French database of bovine transfers to what extent a snapshot-based analysis of the cattle trade networks overestimates the epidemic risks. We bring to light that an analysis taking into account the chronology of interactions would give a much more accurate assessment of both the size and speed of the process. For this purpose, we model data as a temporal network that we analyze using the link stream formalism in order to mix structural and temporal aspects. We also show that in this dataset, a basic SI spreading comes down in most cases to a simple two-phase scenario: a waiting period, with few contacts and low activity, followed by a linear growth of the number of infected holdings. Using this portrait of the spreading process, we identify efficient strategies to control a potential outbreak, based on the identification of specific elements of the link stream which have a higher probability to be involved in a spreading process.
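
The difference between a snapshot-based analysis and one that respects chronology can be sketched on a toy example. The code below is an illustration under strong assumptions, not the measurement procedure of the paper: the SI process is deterministic (every transfer from an infected holding infects the destination), transfers are `(time, source, destination)` tuples, and the holding names are hypothetical.

```python
from itertools import groupby


def si_temporal(transfers, seed):
    """Holdings infected when transfers are replayed in chronological order."""
    infected = {seed}
    # Transfers sharing a timestamp are treated as simultaneous: infections
    # caused at time t cannot propagate further at the same time t.
    for _, batch in groupby(sorted(transfers), key=lambda x: x[0]):
        newly = {dst for _, src, dst in batch if src in infected}
        infected |= newly
    return infected


def si_static(transfers, seed):
    """Holdings reachable from `seed` in the aggregated (time-free) graph."""
    succ = {}
    for _, src, dst in transfers:
        succ.setdefault(src, set()).add(dst)
    infected, stack = {seed}, [seed]
    while stack:
        for nxt in succ.get(stack.pop(), ()):
            if nxt not in infected:
                infected.add(nxt)
                stack.append(nxt)
    return infected


# Hypothetical transfers: the A -> B trade happens after B and C have sold.
transfers = [(3, "A", "B"), (1, "B", "C"), (2, "C", "D")]
print(sorted(si_temporal(transfers, "A")))
print(sorted(si_static(transfers, "A")))
```

In this example the aggregated graph suggests that an outbreak starting in A can reach every holding, whereas the chronological replay stops at B because the onward trades took place before B was exposed.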

Nicolas Hervé

July 9, 2019, 11:00 am. Room 26-00/332, Jussieu.

OTMedia (Observatoire TransMedia) is a software platform dedicated to research projects that makes it possible to analyze large quantities of diverse, multimodal, transmedia data related to French and French-language news. OTMedia continuously collects, processes and indexes thousands of streams from television, radio, the Web, the press, news agencies and Twitter. In the context of this project, we would like to study the propagation of information and images on the Web, using graph theory to help us extract features and indicators that describe media events.

Audrey Wilmet, Tiphaine Viard, Matthieu Latapy and Robin Lamarche-Perrin

Computer Networks, 2019

This paper aims at precisely detecting and identifying anomalous events in IP traffic. To this end, we adopt the link stream formalism which properly captures temporal and structural features of the data. Within this framework, we focus on finding anomalous behaviours with respect to the degree of IP addresses over time. Due to diversity in IP profiles, this feature is typically distributed heterogeneously, preventing us from directly finding anomalies. To deal with this challenge, we design a method to detect outliers as well as precisely identify their cause in a sequence of similar heterogeneous distributions. We apply it to several MAWI captures of IP traffic and we show that it succeeds in detecting relevant patterns in terms of anomalous network activity.

Daniel Archambault

May 27th, 11:00 am, Room 24-25-405. UPMC – Sorbonne Université. 4 Place de Jussieu, 75005 Paris.

One of the most important types of data in data science is the graph or network. Networks encode relationships between entities: people in social networks, genes in biological networks, and many other forms of data. These networks are often dynamic and consist of a set of events, that is, edges or nodes with individual timestamps. In the complex network literature, these networks are often referred to as temporal networks. As an example, a post to a social media service creates an edge existing at a specific time, and a series of posts is a series of such events. However, the majority of dynamic graph visualisations use the timeslice, a series of snapshots of the network at given times, as a basis for visualisation. In this talk, I present two recent approaches for event-based network visualisation: DynNoSlice and the Plaid. DynNoSlice is a method for embedding these networks directly in the 2D+t space-time cube, along with methods to explore the contents of the cube. The Plaid is an interactive system for visualising long-in-time dynamic networks and interaction provenance through interactive timeslicing.

Hong-Lan Botterman and Robin Lamarche-Perrin

CompleNet, 2019

Heterogeneous information networks (HIN) are abstract representations of systems composed of multiple types of entities and their relations. Given a pair of nodes in a HIN, this work aims at recovering the exact weight of the link incident to these two nodes, knowing some other links present in the HIN. In practice, this weight is approximated by a linear combination of probabilities obtained from path-constrained random walks performed on the HIN, i.e., random walks where the walker is forced to follow only a specific sequence of node types and edge types, commonly called a meta path. This method is general enough to compute the link weight between any types of nodes. Experiments on Twitter data show the applicability of the method.
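
A path-constrained random walk can be sketched as follows. This is a simplified illustration, not the method of the paper: the meta path is given as a sequence of edge types only, the walker picks uniformly among the allowed neighbours, walkers with no allowed step are dropped, and the function name `pcrw`, the edge-type labels and the toy data are assumptions. Fitting the coefficients of the linear combination is not shown.

```python
from collections import defaultdict


def pcrw(edges, start, meta_path):
    """Distribution over end nodes of a walk from `start` along `meta_path`.

    `edges` holds (source, edge_type, target) triples and `meta_path` is a
    list of edge types (an assumed, simplified encoding of a meta path).
    """
    out = defaultdict(list)
    for s, etype, t in edges:
        out[(s, etype)].append(t)
    dist = {start: 1.0}
    for etype in meta_path:
        nxt = defaultdict(float)
        for node, p in dist.items():
            targets = out.get((node, etype), [])
            for t in targets:
                # Uniform choice among neighbours reachable by this edge type.
                nxt[t] += p / len(targets)
        dist = dict(nxt)
    return dist


# Hypothetical Twitter-like network: a user posts tweets, tweets carry hashtags.
edges = [
    ("u1", "posts", "t1"),
    ("u1", "posts", "t2"),
    ("t1", "tags", "#a"),
    ("t2", "tags", "#a"),
    ("t2", "tags", "#b"),
]
probs = pcrw(edges, "u1", ["posts", "tags"])
print(probs)
```

Each meta path yields one such probability for a given pair of nodes, and these probabilities are the quantities that a linear model would then combine to approximate a link weight.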

Audrey Wilmet and Robin Lamarche-Perrin

CompleNet, 2019

We introduce a method which aims at getting a better understanding of how millions of interactions may result in global events. Given a set of dimensions and a context, we find different types of outliers: a user whose activity during a given hour is abnormal compared to their usual behavior, a relationship between two users which is abnormal compared to all other relationships, etc. We apply our method to a set of retweets related to the 2017 French presidential election and show that one can gain interesting insights regarding political organization on Twitter.

Lionel Tabourier, Daniel F. Bernardes, Anne-Sophie Libert and Renaud Lambiotte

Machine Learning, 2019

Uncovering unknown or missing links in social networks is a difficult task because of their sparsity and because links may represent different types of relationships, characterized by different structural patterns. In this paper, we define a simple yet efficient supervised learning-to-rank framework, called RankMerging, which aims at combining information provided by various unsupervised rankings. We illustrate our method on three different kinds of social networks and show that it substantially improves the performance of unsupervised ranking methods as well as standard supervised combination strategies. We also describe various properties of RankMerging, such as its computational complexity and its robustness to feature selection and parameter estimation, and discuss its area of relevance: the prediction of an adjustable number of links on large networks.

Vsevolod Salnikov

Thursday, April 4, 2019, 2:00 pm, room 26-00/332, LIP6, Sorbonne Université

In this talk I will present various data-oriented projects we have done recently. The general line will focus on human mobility sensing and different applications of such datasets, from more theoretical ones to extremely applied ones, which are on the border of research and commercial activities. Moreover, we will discuss different stages: from data collection to models and applications, as well as the ‘in-the-field’ validation of model predictions. I will propose a few ways of collecting data, which made it possible to obtain impressive and reliable datasets at almost no cost. These datasets are already used for studies, but I would also be happy to discuss various applications and ways to collaborate!

Pablo Rauzy

Friday, April 12, 2019, 11:00 am, room 25-26/105, LIP6, Sorbonne Université

From the point of view of a user of an information system, privacy corresponds to the control that he or she can exert over his or her personal data in that system. This view of privacy is essential if we want to contribute to the development of emancipatory technologies, that is, technologies that serve their users and no one else. The rigorous study and evaluation of the privacy offered by a system therefore requires a formal characterization of this control. We propose a capability-based formal framework that makes it possible to specify and reason about this control and its properties. We will see through examples that this notably allows the comparison of alternative implementations of the same system (a basic social network, of which we compare three possible implementations), and hence makes it possible to study and optimize privacy as early as the design phase.