Stephany Rajeh, Hocine Cherifi

Rajeh S, Cherifi H (2024) On the role of diffusion dynamics on community-aware centrality measures. PLoS ONE 19(7): e0306561. https://doi.org/10.1371/journal.pone.0306561

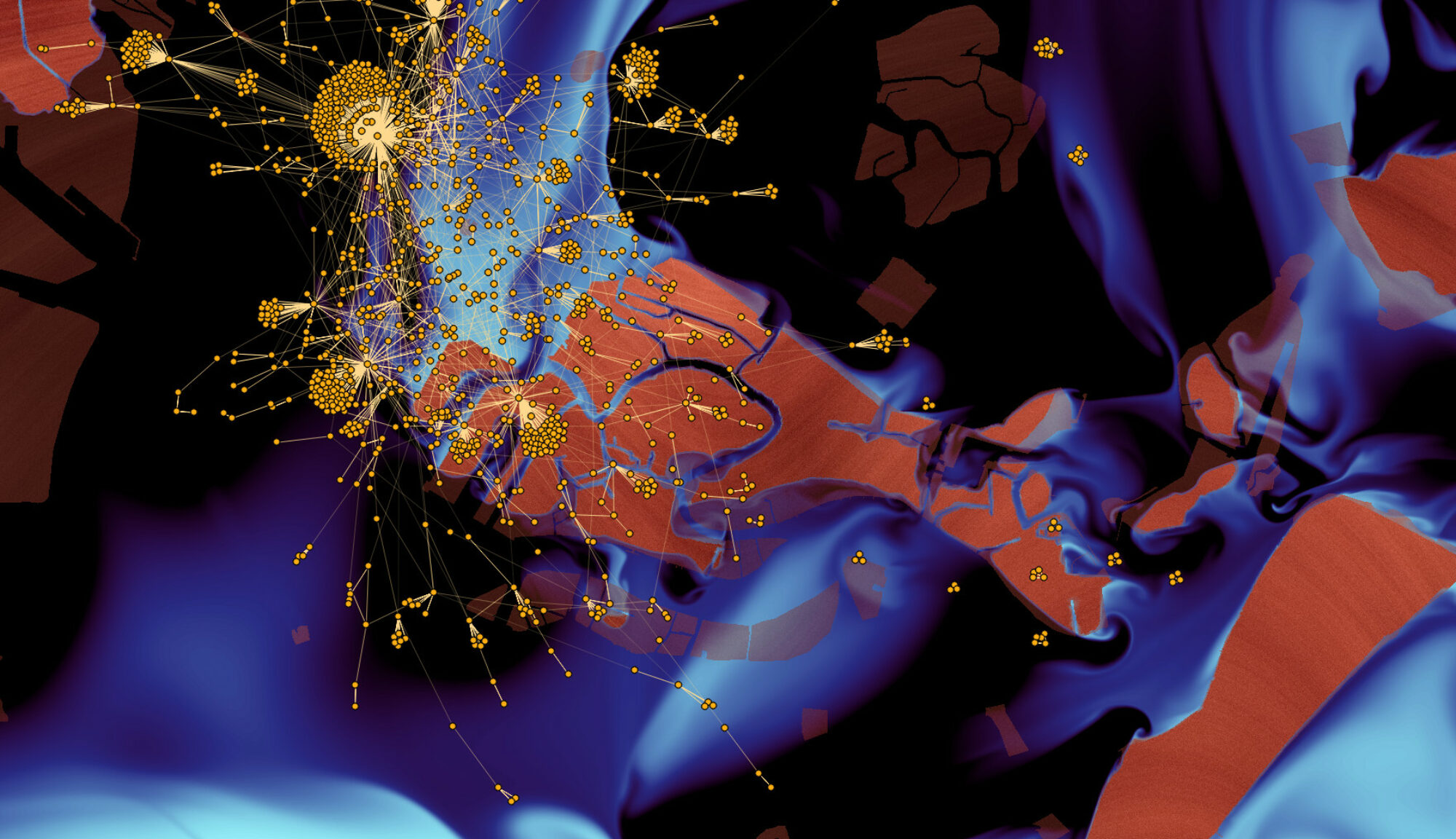

Theoretical and empirical studies of diffusion models have revealed their versatile applicability across fields ranging from sociology and finance to biology and ecology. The community structure of real-world networks has a substantial impact on how diffusion processes unfold. Key nodes located both within and between communities play a crucial role in initiating diffusion, and community-aware centrality measures effectively identify these nodes. While numerous diffusion models have been proposed in the literature, very few studies investigate how the diffusive ability of key nodes selected by community-aware centrality measures relates to the distinct dynamical conditions of the various models and to the diversity of network topologies. Our study aims to gain deeper insight into the effectiveness and applicability of community-aware centrality measures through a comparative evaluation across four diffusion models, using both synthetic and real-world networks and two different community detection techniques. The results suggest that the diffusive power of the selected nodes is affected by three main factors: the strength of the network’s community structure, the internal dynamics of each diffusion model, and the available budget (the number of seed nodes). Specifically, within the category of simple contagion models, namely the Susceptible-Infected (SI), Susceptible-Infected-Recovered (SIR), and Independent Cascade (IC) models, we observe similar diffusion patterns when the strength of the community structure and the budget are held constant. In contrast, the Linear Threshold (LT) model, which falls under the category of complex contagion dynamics, exhibits divergent behavior under the same conditions.
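To make the distinction between simple and complex contagion concrete, here is a minimal Python sketch, not the authors' experimental code. It assumes a hypothetical toy network of two four-node cliques joined by a single bridge edge, a uniform activation probability `p` for the IC model, and, for the LT model, uniform neighbor weights 1/deg with one fixed threshold `theta` shared by all nodes (LT is often run with random thresholds instead). The names `two_cliques`, `independent_cascade`, and `linear_threshold` are illustrative only.

```python
import random


def two_cliques():
    """Hypothetical toy network: cliques {0..3} and {4..7} joined by the bridge edge 3-4."""
    edges = [(u, v) for block in (range(0, 4), range(4, 8))
             for u in block for v in block if u < v]
    edges.append((3, 4))  # the only edge between the two communities
    graph = {n: set() for n in range(8)}
    for u, v in edges:
        graph[u].add(v)
        graph[v].add(u)
    return graph


def independent_cascade(graph, seeds, p, rng):
    """Simple contagion (IC): each newly activated node gets exactly one chance
    to activate each inactive neighbor, independently with probability p."""
    active = set(seeds)
    frontier = list(seeds)
    while frontier:
        next_frontier = []
        for u in frontier:
            for v in graph[u]:
                if v not in active and rng.random() < p:
                    active.add(v)
                    next_frontier.append(v)
        frontier = next_frontier
    return active


def linear_threshold(graph, seeds, theta):
    """Complex contagion (LT): an inactive node activates once the fraction of
    its neighbors that are active (uniform weights 1/deg) reaches theta."""
    active = set(seeds)
    while True:
        newly = {v for v in graph
                 if v not in active and graph[v]
                 and len(graph[v] & active) / len(graph[v]) >= theta}
        if not newly:
            return active
        active |= newly


g = two_cliques()
seeds = {0, 1}  # a budget of two seed nodes, both in the first community
print(sorted(independent_cascade(g, seeds, p=1.0, rng=random.Random(42))))  # [0, 1, 2, 3, 4, 5, 6, 7]
print(sorted(linear_threshold(g, seeds, theta=0.5)))  # [0, 1, 2, 3]
```

With `theta = 0.5`, the LT process stalls at the community boundary: node 4 sees only one active neighbor out of four, so a single bridge edge cannot carry the contagion across. IC with `p = 1` reaches every node in the connected component because one active neighbor suffices; with `p < 1` the IC outcome is random, so its spread would normally be averaged over many runs.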