Antoine Vendeville, Postdoctoral researcher (Médialab, Sciences-Po)

Thursday, 12 June 2025, at 11:00 in room 24-25-405

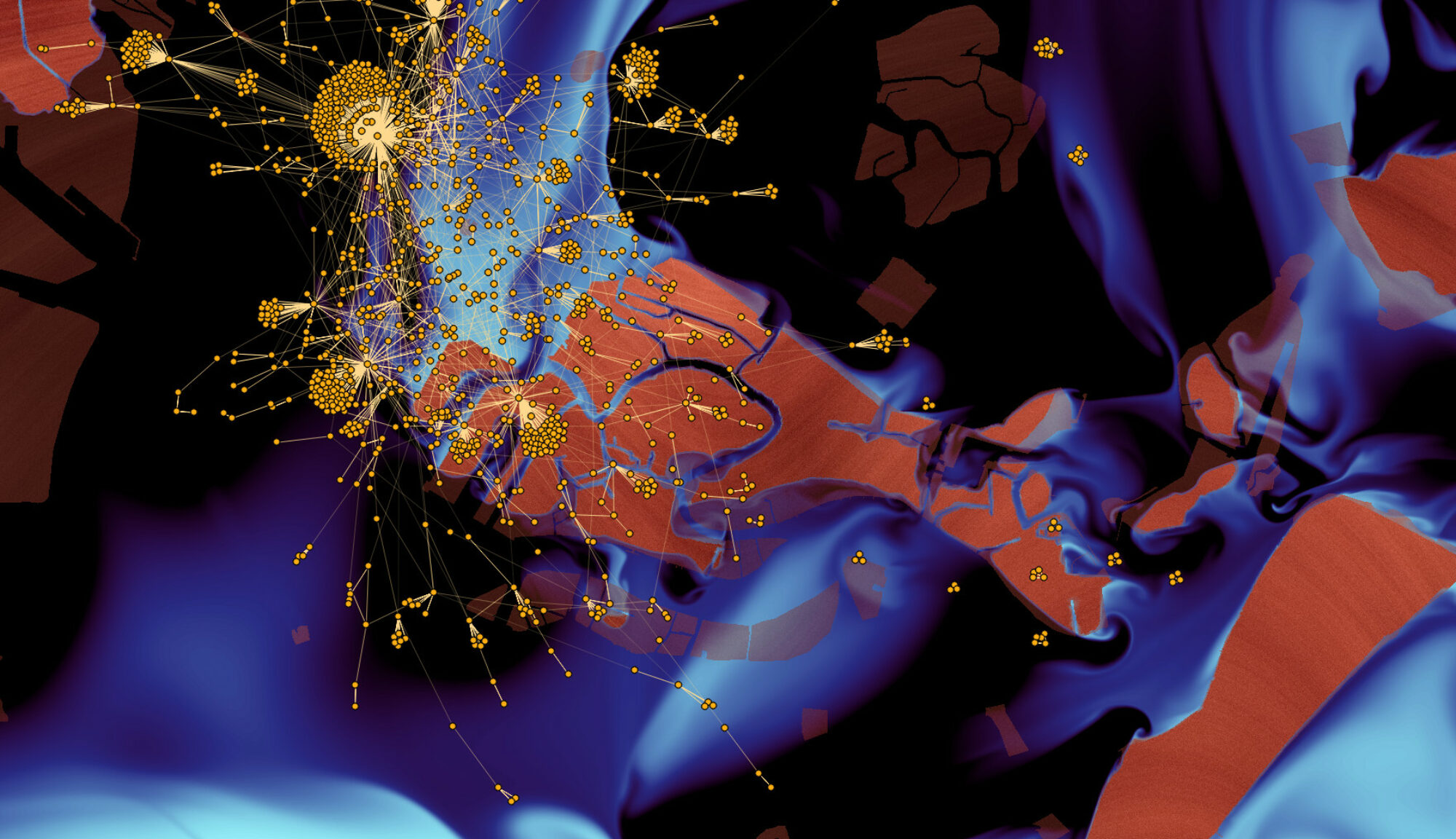

The study of public opinion phenomena online, and of political polarization in particular, attracts significant interest in computational social science. Many studies of political phenomena on social media require operationalizing the political stance of the users and content involved. Relevant examples include the study of segregation and polarization online, or of political diversity in users' content diets on social media. While many research designs rely on operationalizations best suited to the US setting, few accommodate more general settings in which users and content may take stances on multiple ideological and issue dimensions, going beyond the traditional Liberal-Conservative or Left-Right scales. To advance the study of such general online ecosystems, we present a methodology for computing the multidimensional political positions of social media users and web domains. We perform a case study on a large-scale population of X/Twitter users from the French political Twittersphere, together with web domains, embedded in a political space spanned by dimensions measuring attitudes towards immigration, the EU, liberal values, elites and institutions, nationalism, and the environment. We provide several benchmarks validating the positions of these entities (based on both LLM and human annotations), and discuss the case studies in which they can be used, e.g., AI explainability, political polarization and segregation, and media diets. To encourage reproducibility and further studies on the topic, we publicly release our anonymized data.